Data scientists typically want to minimise the error associated with their predictions. Certainly, pollsters want their opinion poll estimates immediately prior to an election to reflect the actual election outcome as closely as possible.

However, this is no easy task. With many predictive models, there is often a trade-off between the error associated with bias and the error associated with variance. In data science, and particularly in the domain of machine learning, this phenomenon is known as the bias-variance trade-off or the bias-variance dilemma.

According to the bias-variance trade-off model, less than optimally tuned predictive models often either deliver high bias and low variance predictions, or the opposite: low bias and high variance predictions. At this stage, a chart might help with your intuition, and I will take a little time to define what I mean by bias and variance.

Two types of prediction error: bias and variance

Bias tells us how closely our predictions are typically, or on average, aligned with the true value for the population or the actual outcome. In terms of the above bullseye diagram, how closely do our predictions on average land in the very centre of the bullseye?

Variance tells us about the variability in our predictions. It tells us how tightly our individual predictions cluster. In other words, variance indicates how far and wide our predictions are spread. Do they span a small range or a large range?

In our 2x2 bullseye grid above, a predictive model (or, in the case of the 2019 opinion polls, a system of predictive models) can deliver predictions with high or low variance and high or low bias.
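To make the two definitions concrete, here is a minimal sketch that measures bias and variance for four sets of predictions, one for each quadrant of a 2x2 grid. Everything in it is hypothetical: the true value of 50, the offsets and spreads, and the helper name `bias_and_variance` are illustrative assumptions rather than anything taken from real polls.

```python
import numpy as np

rng = np.random.default_rng(42)
true_value = 50.0  # hypothetical true outcome (say, a vote share in per cent)


def bias_and_variance(predictions, truth):
    """Return (bias, variance) for a set of predictions of a single true value."""
    predictions = np.asarray(predictions, dtype=float)
    bias = predictions.mean() - truth  # average miss: how far off-centre we land
    variance = predictions.var()       # spread of the predictions around their own mean
    return bias, variance


# Four hypothetical sets of 1000 predictions, one per quadrant of the grid.
# Offsets (0 or 3) and standard deviations (0.5 or 3) are illustrative only.
quadrants = {
    "low bias, low variance": rng.normal(true_value + 0.0, 0.5, 1000),
    "low bias, high variance": rng.normal(true_value + 0.0, 3.0, 1000),
    "high bias, low variance": rng.normal(true_value + 3.0, 0.5, 1000),
    "high bias, high variance": rng.normal(true_value + 3.0, 3.0, 1000),
}

results = {name: bias_and_variance(p, true_value) for name, p in quadrants.items()}
for name, (bias, variance) in results.items():
    print(f"{name:26s} bias={bias:+.2f}  variance={variance:.2f}")
```

Note that bias is measured against the true value, while variance is measured against the predictions' own average, which is why a set of predictions can be tightly clustered and still be a long way from the bullseye.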

In 2019, the opinion polls exhibited high bias and low variance

My contention is that collectively, the opinion polling in the six-week lead-up to the 2019 Australian Federal election exhibited high bias and low variance. It was in the top left-hand quadrant of the bullseye diagram above.

As evidence for this contention, I would note: Kevin Bonham has argued that, by Australian standards, the opinion polls leading up to the 2019 election had their biggest miss since the mid-1980s. Also, I have previously commented on the extraordinarily low variance among the opinion polls prior to the 2019 election.

Changes in statistical practice and social realities

Before we come to the implications of the high bias/low variance polling outcome in 2019, it is worth reflecting on how polling practice has been driven to change in recent times. In particular, I want to highlight the increased importance of data analytics in the production of opinion poll estimates.

Newspoll was famous for introducing an era of reliable and accurate polling in the Australian context. With the near-universal penetration of landline telephones in Australian households by the 1980s, pollsters were able to develop sample frames that came close to the Holy Grail of giving every member of the Australian voting public an equal chance of being included in any survey the pollster ran.

While pollsters came close, their sampling frames were not perfect, and weightings and adjustments were still needed for under-represented groups. Nonetheless, the extent to which weightings and analytics were needed to make up for shortfalls in the sample frame was small compared with today.

So what has changed since the mid-1980s? Quite a few things. Here are but a few:

- The general population's use of landlines has declined. In some cases, the plain old telephone system (POTS) has been replaced by a voice over internet protocol (VOIP) service. Many households have terminated their landline service altogether, in favour of the mobile phone. Younger people in share houses are very unlikely to have a shared telephone landline or even a VOIP service. Further, mobile and VOIP numbers are not listed in the White Pages by default. As a consequence, constructing a telephone-based sample frame is much more challenging than it once was.

- The rise of digital technologies has seen a growth in robocalls, which many people find annoying. Caller identification on phones has given rise to the practice of call screening. If a polling call is taken, busy people and those not interested in politics often just hang up. As a result of these and other factors, participation rates in telephone polls have plummeted. In the United States, Pew Research reported a decline in telephone survey responses from 36 per cent in 1997 to just 6 per cent in 2018. Newspoll's decision to abandon telephone polling suggests something similar may have happened in Australia.

- The newspapers that purchase much of the publicly available opinion polling have had their budgets squeezed, and they want to spend less on polling. Ironically, lower telephone survey response rates (as noted above) are driving up the costs of telephone surveys. As a consequence, pollsters have had to turn to lower-cost (and more challenging) sampling techniques, such as internet surveys.

- While more people may be online these days (as Newspoll argues), online participation remains less widespread than landline access was in the mid-1980s.

Because of the above, pollsters need to work much harder to address the shortcomings in their sampling frame. Pollsters need to make far more data-driven adjustments to today's raw poll results than happened in the mid-1980s. This work is far more complex than simply weighting for sub-cohort population shares. Which brings us back to the bias-variance trade-off.

What the bias-variance trade-off outcome suggests

The error for a predictive model can be thought of as the sum of the squared differences between the true value and all of the predicted values from the model:

$$\text{Error} = \sum (\text{predicted}_y - \text{true}_y)^2$$

The critical insight from the bias-variance trade-off is that this error can be decomposed into three components:

$$\text{Error} = \text{Bias}^2 + \text{Variance} + \text{Irreducible Error}$$

We have already discussed bias and variance. The irreducible error is that error that cannot be removed by developing a better predictive model. All predictive models will have some noise-related error.
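For readers who want the formal version, the decomposition is usually stated for the expected squared error of a prediction at a single point. The notation below is my own addition rather than anything defined above: an observation is \(y = f(x) + \varepsilon\), where \(f\) is the true underlying function and \(\varepsilon\) is noise with mean zero and variance \(\sigma^2\), and \(\hat{f}(x)\) is the model's prediction, which changes with the data the model was fitted to. Assuming the noise in a new observation is independent of the fitted model, and taking expectations over both the fitting data and the new noise:

$$\mathbb{E}\left[\left(y - \hat{f}(x)\right)^2\right] = \underbrace{\left(\mathbb{E}[\hat{f}(x)] - f(x)\right)^2}_{\text{Bias}^2} + \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\right)^2\right]}_{\text{Variance}} + \underbrace{\sigma^2}_{\text{Irreducible Error}}$$

The cross terms vanish because \(\varepsilon\) has zero mean and is independent of \(\hat{f}(x)\), and because \(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\) has zero mean by construction.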

Simple models tend to underfit the data. They do not capture enough of the information or "signal" available in the data. As a result, they tend to have low variance, but high bias.

Complex models tend to overfit the data. Because they typically have many parameters, they tend to treat noise in the data as the signal. This tends to result in predictive models that have low bias but high variance (driven by the noise in the data).

Again, to help develop our intuition (and to avoid the complex mathematics), let's turn to a visual aid to get a sense of what I mean by under-fitting and over-fitting a predictive model to the data.

The data below (the red dots) comes from a randomly perturbed sine wave between 0 and \(\pi\). I have three models that look at those red dots (the perturbed data) and try to predict the form or shape of the underlying curve:

- a simple linear regression - which predicts a straight line

- a quadratic regression - which predicts a parabola

- a high-order polynomial regression - which can predict quite a complicated line

The linear regression (the straight blue line, top left) provides an under-fitted model for the curve evident in the data. The quadratic regression (the inverted parabola, bottom left) provides a reasonably good model. It is the closest of the models to a sine wave between the values of 0 and \(\pi\). And the polynomial regression of degree 25 (the squiggly blue line, bottom right) over-fits the data and reflects some of the random noise introduced by the perturbation process.
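For anyone who wants to reproduce the flavour of this experiment, here is a rough sketch of the three fits. It is not the code behind the charts: the number of points (50), the noise level (standard deviation 0.2), the random seed and the use of NumPy's `Chebyshev.fit` are all my assumptions. `Chebyshev.fit` performs an ordinary least-squares polynomial fit of the requested degree; I use it only because it tends to stay numerically stable at degree 25.

```python
import numpy as np
from numpy.polynomial import Chebyshev

rng = np.random.default_rng(0)

# Assumed setup: 50 evenly spaced x values on [0, pi], Gaussian noise with sd 0.2.
n_points, noise_sd = 50, 0.2
x = np.linspace(0.0, np.pi, n_points)
y = np.sin(x) + rng.normal(0.0, noise_sd, n_points)  # the perturbed "red dots"

# Least-squares polynomial fits of increasing complexity.
degrees = {"linear": 1, "quadratic": 2, "degree 25": 25}
models = {name: Chebyshev.fit(x, y, deg) for name, deg in degrees.items()}

grid = np.linspace(0.0, np.pi, 200)
fit_to_data = {}         # RMS distance between each model and the noisy points
distance_from_sine = {}  # RMS distance between each model and the true sine curve
for name, model in models.items():
    fit_to_data[name] = np.sqrt(np.mean((model(x) - y) ** 2))
    distance_from_sine[name] = np.sqrt(np.mean((model(grid) - np.sin(grid)) ** 2))
    print(f"{name:10s} fit to data={fit_to_data[name]:.3f}  "
          f"distance from sine={distance_from_sine[name]:.3f}")
```

The two measures tell different stories. The fit to the noisy data can only improve as the degree rises, because each model contains the simpler ones as special cases. The distance from the underlying sine curve is the quantity we actually care about, and a closer fit to the noisy points does not guarantee a smaller one.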

The next chart compares the three blue models above, with the actual sine wave before the perturbation. The closer the blue line is to the red line, the less error the model has.

The model with the least error between the predicted line and the sine curve is the parabola. The straight line and the squiggly line both deviate from the actual sine curve for most of their length.

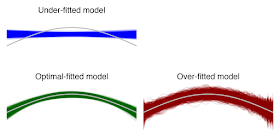

When we run these three predictive models many times we can see something about the type of error for these three models. In the next chart, we have generated a randomly perturbed set of points 1000 times (just like we did above) and asked each of the models to predict the underlying line 1000 times. Each of the predictions from the 1000 model runs has then been plotted on the next chart. For reference, the sine curve the models are trying to predict is over-plotted in light-grey.

Looking at the multiple runs, the bias and variance for the distribution of predictions from the three models become clear:

- If we look at the blue linear model in the top left, we see high bias (the straight-line predictions from this model have significant points of deviation from the sine curve). But the 1000 model predictions are tightly clustered showing low variance across the predictions. The distribution of predictions from the under-fitted model shows high bias and low variance.

- The green quadratic model in the bottom left exhibits low bias. The 1000 model predictions closely follow the sine curve. They are also tightly clustered showing low variance. The distribution of predictions from the optimally-fitted model shows low bias and low variance. These predictions have the least total error.

- The red high-order polynomial model in the bottom right exhibits low bias: on average, the 1000 predictions are close to the sine curve. However, the multiple runs exhibit quite a spread of predictions compared with the other two models. The distribution of predictions from the over-fitted model shows a low bias, but high variance.
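The repeated-runs experiment described above can be sketched in a few lines. Again, this is an illustration under my own assumptions rather than the code used for the charts: 50 evenly spaced x values, Gaussian noise with standard deviation 0.2, and predictions evaluated at those same x values. For each model it estimates the squared bias (how far the average prediction sits from the sine curve) and the variance (how much the predictions move from run to run).

```python
import numpy as np
from numpy.polynomial import Chebyshev

rng = np.random.default_rng(1)

# Assumed setup (not taken from the original charts).
n_points, noise_sd, n_runs = 50, 0.2, 1000
x = np.linspace(0.0, np.pi, n_points)
truth = np.sin(x)
degrees = {"linear": 1, "quadratic": 2, "degree 25": 25}

# predictions[name][r, i] is the prediction of model `name` at x[i] in run r.
predictions = {name: np.empty((n_runs, n_points)) for name in degrees}
for run in range(n_runs):
    y = truth + rng.normal(0.0, noise_sd, n_points)  # a fresh perturbed data set
    for name, deg in degrees.items():
        predictions[name][run] = Chebyshev.fit(x, y, deg)(x)

summary = {}
for name, preds in predictions.items():
    mean_prediction = preds.mean(axis=0)                  # average curve over all runs
    bias_sq = np.mean((mean_prediction - truth) ** 2)     # squared bias, averaged over x
    variance = np.mean(preds.var(axis=0))                 # run-to-run spread, averaged over x
    summary[name] = (bias_sq, variance)
    print(f"{name:10s} bias^2={bias_sq:.5f}  variance={variance:.5f}  "
          f"sum={bias_sq + variance:.5f}")
```

Under these assumptions the pattern matches the description above: the straight line carries most of its error as bias, the degree-25 polynomial carries most of its error as variance, and the quadratic has the smallest sum of the two.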

Coming back to the three-component cumulative error equation above, we can see the least total error occurs at an optimal point between a simple and complex model. Again, let's use a stylised chart to aid intuition.

As model complexity increases, the prediction error associated with bias decreases, but the prediction error from variance increases. This minimal total error sweet-spot (between simplicity and complexity) is the key insight of the bias-variance trade-off model. This is the best trade-off between the error from prediction bias and the error from prediction variance.
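The shape of that stylised curve can also be computed directly for the perturbed sine wave example, without simulation. The sketch below assumes 50 evenly spaced x values on \([0, \pi]\) and Gaussian noise with standard deviation 0.2 (my choices, not the author's original settings). For a least-squares polynomial fit on fixed x values, the expected prediction is the projection of the true curve onto the model's polynomial space, and the variance at each point is the noise variance times that point's leverage, so both error components can be calculated exactly for each degree.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

# Assumed setup: fixed x values, known noise level.
n_points, noise_sd = 50, 0.2
x = np.linspace(0.0, np.pi, n_points)
truth = np.sin(x)
t = 2.0 * x / np.pi - 1.0  # rescale x to [-1, 1] for numerical stability

rows = []
for degree in range(1, 13):
    design = C.chebvander(t, degree)   # columns span all polynomials up to `degree`
    q, _ = np.linalg.qr(design)        # orthonormal basis for that column space
    expected_fit = q @ (q.T @ truth)   # average prediction = projection of the truth
    bias_sq = np.mean((expected_fit - truth) ** 2)
    leverage = np.sum(q ** 2, axis=1)  # diagonal of the hat matrix
    variance = noise_sd ** 2 * np.mean(leverage)
    total = bias_sq + variance + noise_sd ** 2  # add the irreducible noise
    rows.append((degree, bias_sq, variance, total))
    print(f"degree {degree:2d}  bias^2={bias_sq:.5f}  "
          f"variance={variance:.5f}  total={total:.5f}")

best_degree = min(rows, key=lambda row: row[3])[0]
print("degree with the least total error:", best_degree)
```

Where the sweet spot falls depends on the assumptions. With less noise or more data points, the variance penalty for each extra parameter shrinks and a more complex model becomes worthwhile.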

So what does this mean for the polls before the 2019 election?

The high bias and low variance in the 2019 poll results suggest that collectively the pollsters had not done enough to ensure their polling models produced optimal election outcome predictions. In short, their predictive models were not complex enough to adequately overcome the sampling frame problems (noted above) that they were wrestling with.

Without transparency from the pollsters, it is hard to know specifically where the pollsters' approach lacked the necessary complexity.

Nonetheless, I have wondered to what extent the pollsters use each other (either purposefully or through confirmation bias) to benchmark their predictions and adjust for the (perceived) bias that arises from issues with their sampling frames. Mutual cross-validation, should it be occurring, would in effect simplify their collective models.

I have also wondered whether the pollsters look at non-linearity effects between their sample frame and changes in voting intention in the Australian public. If the samples that the pollsters are working with are more educated and more rusted on to a political party on average, their response to changing community sentiment may be muted compared with the broader community. For example, a predictive model that has been validated when community sentiment is at (say) 47 or 48 per cent, may not be reliable when community sentiment moves to 51 per cent.

I have wondered whether the pollsters built rolling averages or some other smoothing technique into their predictive models. Again, if so, this could be a source of simplicity.

I have wondered whether the pollsters are cycling through the same survey respondents over and over again. Again, if so, this could be a source of simplicity.
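To illustrate how one of these mechanisms could produce a high bias/low variance outcome, here is a toy simulation of pollsters benchmarking against each other (a behaviour sometimes called herding). I am not claiming this is what happened in 2019. Every number is hypothetical, and the rule that each published figure is a weighted blend of the pollster's raw result and the average of earlier published polls is simply one assumed way of modelling the idea.

```python
import numpy as np

rng = np.random.default_rng(7)

# Every value here is hypothetical and chosen only to illustrate the mechanism.
true_share = 0.50    # actual level of support for a party
frame_bias = 0.03    # systematic overstatement caused by the sampling frame
sample_size = 1000   # respondents per poll
n_polls = 200        # polls published one after another
own_weight = 0.3     # weight a benchmarking pollster gives its own raw result

# Raw results: simple binomial sampling from the biased frame.
raw = rng.binomial(sample_size, true_share + frame_bias, n_polls) / sample_size

# Benchmarked results: each published figure is pulled toward the average
# of everything published before it.
herded = np.empty(n_polls)
herded[0] = raw[0]
for k in range(1, n_polls):
    herded[k] = own_weight * raw[k] + (1.0 - own_weight) * herded[:k].mean()

for label, polls in (("independent", raw), ("benchmarked", herded)):
    print(f"{label:12s} mean error={polls.mean() - true_share:+.4f}  "
          f"spread (sd)={polls.std():.4f}")
```

In this toy setting, benchmarking narrows the spread of the published polls but does nothing to remove the error that comes from the sampling frame, because every pollster is anchoring to figures that share the same flaw.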

I have many questions as to what might have gone wrong in 2019. The bias-variance trade-off model provides some suggestion as to what the nature of the problem might have been.

Further reading