Note: the following page is the approach I took to the 2016 Federal Election. The Bayesian Aggregation page is being/has been updated for the 2019 election ...

This page provides some technical background on the Bayesian poll aggregation models used on this site. The page has been updated for the 2016 Federal election.

General overview

The aggregation or data fusion models I use are known as hidden Markov models. They are sometimes referred to as state space models or latent process models.

I model the national voting intention (which cannot be observed directly; it is "hidden") for each and every day of the period under analysis. The only time the national voting intention is not hidden is at an election. In some models (known as anchored models), we use the election result to anchor the daily model.

In the language of modelling, our estimates of the national voting intention for each day being modelled are known as states. These "states" link together to form a Markov process, where each state is directly dependent on the previous state and a probability distribution linking the states. In plain English, the models assume that the national voting intention today is much like it was yesterday. The simplest models assume the voting intention today is normally distributed around the voting intention yesterday.
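As a rough illustration of that last sentence (this is not the code used on this site), the sketch below simulates a hidden daily walk in Python. The function name `simulate_walk`, the starting value of 0.5 and the daily standard deviation of 0.0025 are all assumptions chosen for the example.

```python
import random

def simulate_walk(n_days, start=0.5, sigma_walk=0.0025, seed=1):
    """Simulate a daily Gaussian random walk of voting intention.

    start and sigma_walk are illustrative values only.
    """
    rng = random.Random(seed)
    walk = [start]
    for _ in range(1, n_days):
        # today's value is normally distributed around yesterday's value
        walk.append(rng.gauss(walk[-1], sigma_walk))
    return walk

walk = simulate_walk(n_days=100)
print(f"day 1: {walk[0]:.4f}, day 100: {walk[-1]:.4f}")
```

Each day depends only on the day before it, which is what makes the sequence a Markov process.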

The model is informed by irregular and noisy data from the selected polling houses. The challenge for the model is to ignore the noise and find the underlying signal. In effect, the model is solved by finding the day-to-day pathway with the maximum likelihood given the known poll results.

To improve the robustness of the model, we make provision for the long-run tendency of each polling house to systematically favour either the Coalition or Labor. We call this small tendency to favour one side or the other a "house effect". The model assumes that the results from each pollster diverge (on average) from the real population voting intention by a small, constant number of percentage points. We use the calculated house effect to adjust the raw polling data from each polling house.

In estimating the house effects, we can take one of a number of approaches. We could:

- anchor the model to an election result on a particular day, and use that anchoring to establish the house effects;

- anchor the model to a particular polling house or houses; or

- assume that collectively the polling houses are unbiased, and that collectively their house effects sum to zero.
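To make the third approach concrete, here is a small Python sketch of a sum-to-zero constraint and of how a house effect is removed from a published result. The helper names and the house effect values are hypothetical; they are not estimates from my models.

```python
def sum_to_zero(free_effects):
    """Return house effects where the first is set so that all sum to zero."""
    first = -sum(free_effects)
    return [first] + list(free_effects)

def adjust_poll(raw_share, house_effect):
    """Remove a house effect from a published poll result."""
    return raw_share - house_effect

# hypothetical house effects (as proportions) for houses 2 to 4
house_effects = sum_to_zero([0.010, -0.004, -0.001])

# a published 52 per cent from house 2, which leans 1 point to one side
adjusted = adjust_poll(0.52, house_effects[1])
print(house_effects, round(adjusted, 4))
```

Only the effects for houses 2 onwards are free to vary; the first house takes whatever value makes the total zero.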

Typically, I use the first and third approaches in my models. Some models assume that the house effects sum to zero. Other models assume that the house effects can be determined absolutely by anchoring the hidden model for a particular day or week to a known election outcome.

There are issues with both approaches. The problem with anchoring the model to an election outcome (or to a particular polling house) is that pollsters are constantly reviewing and, from time to time, changing their polling practice. Over time these changes affect the reliability of the model. On the other hand, the sum-to-zero assumption is rarely correct. Of the two approaches, anchoring tends to perform better.

Another adjustment we make is to allow discontinuities in the hidden process when either party changes its leadership. Leadership changes can see immediate changes in the voting preferences. For the anchored models, we allow for discontinuities with the Gillard to Rudd and Abbott to Turnbull leadership changes. The unanchored models have a single discontinuity with the Abbott to Turnbull leadership change.

Solving a model necessitates integration over a series of complex multidimensional probability distributions. The definite integral is typically impossible to solve algebraically. But it can be solved using a numerical method based on Markov chains and random numbers known as Markov Chain Monte Carlo (MCMC) integration.
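To give a feel for the idea, the following is a toy random-walk Metropolis sampler in Python. It is only a sketch: it estimates a single proportion from one hypothetical poll (520 of 1000 respondents) under a flat prior, a case where the exact answer is known, and it is not the sampling scheme that any particular MCMC package uses internally. The function names, step size and sample counts are assumptions.

```python
import math
import random

def log_posterior(p, k, n):
    """Log of the unnormalised posterior for a proportion p (flat prior)."""
    if p <= 0.0 or p >= 1.0:
        return -math.inf
    return k * math.log(p) + (n - k) * math.log(1.0 - p)

def metropolis(k, n, n_samples=20000, burn_in=2000, step=0.02, seed=42):
    """Draw posterior samples of p with a random-walk Metropolis sampler."""
    rng = random.Random(seed)
    current = 0.5
    current_lp = log_posterior(current, k, n)
    samples = []
    for i in range(n_samples + burn_in):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_posterior(proposal, k, n)
        # accept with probability min(1, posterior ratio)
        accept_prob = math.exp(min(0.0, proposal_lp - current_lp))
        if rng.random() < accept_prob:
            current, current_lp = proposal, proposal_lp
        if i >= burn_in:
            samples.append(current)
    return samples

samples = metropolis(k=520, n=1000)
estimate = sum(samples) / len(samples)
exact = (520 + 1) / (1000 + 2)  # mean of the exact Beta posterior
print(f"MCMC estimate {estimate:.4f}, exact {exact:.4f}")
```

The average of the samples approximates the integral that defines the posterior mean. The poll models on this page work the same way in principle, but over hundreds of linked parameters rather than one.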

I use a free software product called JAGS to solve these models.

The dynamic linear model of TPP voting intention with house effects summed to zero

This is the simplest model. It has three parts:

- The observational part of the model assumes two factors explain the difference between published poll results (what we observe) and the national voting intention on a particular day (which, with the exception of elections, is hidden):

  - The first factor is the margin of error from classical statistics. This is the random error associated with selecting a sample; and

  - The second factor is the systemic biases (house effects) that affect each pollster's published estimate of the population voting intention.

- The temporal part of the model assumes that the actual population voting intention on any day is much the same as it was on the previous day (with the exception of discontinuities). The model estimates the (hidden) population voting intention for every day under analysis.

- The house effects part of the model assumes that house effects are distributed around zero and sum to zero.
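The first factor enters the model as a precision (the reciprocal of the variance) for each poll. This page does not spell out how that precision is calculated, so the Python sketch below is an assumption: it uses the textbook sampling variance for a proportion under simple random sampling, p(1 - p) / n. The function name is hypothetical.

```python
import math

def sample_precision(share, sample_size):
    """Precision (1 / variance) of a poll share under simple random sampling."""
    variance = share * (1.0 - share) / sample_size
    return 1.0 / variance

# a poll of 1000 voters with a 50 per cent result
precision = sample_precision(0.5, 1000)
margin_of_error = 1.96 * math.sqrt(1.0 / precision)
print(f"precision {precision:.0f}, 95% margin of error {margin_of_error:.3f}")
```

For a sample of 1000 this gives the familiar margin of error of roughly three percentage points.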

This model builds on original work by Professor Simon Jackman. It is encoded in JAGS as follows:

```
model {
    ## -- draws on models developed by Simon Jackman

    ## -- observational model
    for(poll in 1:n_polls) {
        yhat[poll] <- houseEffect[house[poll]] + hidden_voting_intention[day[poll]]
        y[poll] ~ dnorm(yhat[poll], samplePrecision[poll]) # distribution
    }

    ## -- temporal model - with one discontinuity
    hidden_voting_intention[1] ~ dunif(0.3, 0.7) # contextually uninformative
    hidden_voting_intention[discontinuity] ~ dunif(0.3, 0.7) # ditto

    for(i in 2:(discontinuity-1)) {
        hidden_voting_intention[i] ~ dnorm(hidden_voting_intention[i-1], walkPrecision)
    }
    for (j in (discontinuity+1):n_span) {
        hidden_voting_intention[j] ~ dnorm(hidden_voting_intention[j-1], walkPrecision)
    }
    sigmaWalk ~ dunif(0, 0.01)
    walkPrecision <- pow(sigmaWalk, -2)

    ## -- house effects model
    for(i in 2:n_houses) {
        houseEffect[i] ~ dunif(-0.15, 0.15) # contextually uninformative
    }
    houseEffect[1] <- -sum( houseEffect[2:n_houses] )
}
```

Professor Jackman's original JAGS code can be found in the file kalman.bug, in the zip file link on this page, under the heading Pooling the Polls Over an Election Campaign.

The anchored dynamic linear model of TPP voting intention

This model is much the same as the previous model. However, it is run with data from prior to the 2013 election to anchor poll performance. It includes two discontinuities, with the ascensions of Rudd and Turnbull. And, because it is anchored, the house effects are not constrained to sum to zero.

```
model {
    ## -- observational model
    for(poll in 1:n_polls) {
        yhat[poll] <- houseEffect[house[poll]] + hidden_voting_intention[day[poll]]
        y[poll] ~ dnorm(yhat[poll], samplePrecision[poll]) # distribution
    }

    ## -- temporal model - with two or more discontinuities
    # priors ...
    hidden_voting_intention[1] ~ dunif(0.3, 0.7) # fairly uninformative
    for(j in 1:n_discontinuities) {
        hidden_voting_intention[discontinuities[j]] ~ dunif(0.3, 0.7) # fairly uninformative
    }
    sigmaWalk ~ dunif(0, 0.01)
    walkPrecision <- pow(sigmaWalk, -2)

    # Up until the first discontinuity ...
    for(k in 2:(discontinuities[1]-1)) {
        hidden_voting_intention[k] ~ dnorm(hidden_voting_intention[k-1], walkPrecision)
    }

    # Between the discontinuities ... assumes 2 or more discontinuities ...
    for( disc in 1:(n_discontinuities-1) ) {
        for(k in (discontinuities[disc]+1):(discontinuities[(disc+1)]-1)) {
            hidden_voting_intention[k] ~ dnorm(hidden_voting_intention[k-1], walkPrecision)
        }
    }

    # after the last discontinuity
    for(k in (discontinuities[n_discontinuities]+1):n_span) {
        hidden_voting_intention[k] ~ dnorm(hidden_voting_intention[k-1], walkPrecision)
    }

    ## -- house effects model
    for(i in 1:n_houses) {
        houseEffect[i] ~ dnorm(0, pow(0.05, -2))
    }
}
```

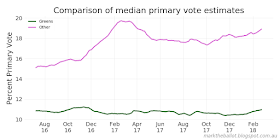

The latent Dirichlet process for primary voting intention

This model is more complex. It takes advantage of the Dirichlet (pronounced dirik-lay) distribution, whose draws always sum to 1, just as the primary votes for all parties would sum to 100 per cent of voters at an election. A weakness is that the day-to-day transmission mechanism uses a "tightness of fit" parameter, which has been arbitrarily selected.
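To see what a given tightness implies, note that a Dirichlet distribution whose parameters are yesterday's shares multiplied by a tightness T has a mean equal to yesterday's shares and a variance of p(1 - p) / (T + 1) for a party with share p. The Python sketch below works through that arithmetic for the tightness of 50000 that appears in the model code on this page. The function name and the example shares are assumptions.

```python
import math

def daily_sd(share, tightness):
    """Standard deviation of one day's Dirichlet step for a party share."""
    return math.sqrt(share * (1.0 - share) / (tightness + 1.0))

sd_major = daily_sd(0.40, 50000)  # a party on 40 per cent
sd_minor = daily_sd(0.10, 50000)  # a party on 10 per cent
print(f"major: {sd_major:.5f}, minor: {sd_minor:.5f}")
```

So a tightness of 50000 lets a party on 40 per cent move by about 0.2 of a percentage point (one standard deviation) from one day to the next, and smaller parties by less.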

The model is set up a little differently to the previous models. Rather than pass the vote share as a number between 0 and 1, we pass the size of the sample that indicated a preference for each party. For example, if the poll is of 1000 voters, with 40 per cent for the Coalition, 40 per cent for Labor, 11 per cent for the Greens and 9 per cent for Other parties, the multinomial we would pass across for this poll is [400, 400, 110, 90].
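That conversion can be sketched in a few lines of Python. The function name is hypothetical, and the rounding step is an assumption about how to handle published percentages that do not convert to whole respondents.

```python
def poll_to_counts(shares_percent, sample_size):
    """Convert percentage vote shares into whole-number respondent counts."""
    return [round(sample_size * pct / 100.0) for pct in shares_percent]

# Coalition, Labor, Greens, Other
counts = poll_to_counts([40, 40, 11, 9], 1000)
total = sum(counts)  # the sample size to pass alongside the counts
print(counts, total)
```

Because of rounding, the counts will not always add up to the published sample size, so it is safer to pass the sum of the counts as the sample size for the multinomial.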

More broadly, this model is conceptually very similar to the sum-to-zero TPP model, with an observational component, a dynamic walk of primary voting proportions (modelled as a hierarchical Dirichlet process), a discontinuity for Turnbull's ascension, and a set of house effects that sum to zero across polling houses and across the parties.

```
data {
    zero <- 0.0
}

model {
    #### -- observational model
    for(poll in 1:NUMPOLLS) { # for each poll result - rows
        adjusted_poll[poll, 1:PARTIES] <- walk[pollDay[poll], 1:PARTIES] +
            houseEffect[house[poll], 1:PARTIES]
        primaryVotes[poll, 1:PARTIES] ~ dmulti(adjusted_poll[poll, 1:PARTIES], n[poll])
    }

    #### -- temporal model with one discontinuity
    tightness <- 50000 # kludge - today very much like yesterday

    # - before discontinuity
    for(day in 2:(discontinuity-1)) {
        # Note: use math not a distribution to generate the multinomial ...
        multinomial[day, 1:PARTIES] <- walk[day-1, 1:PARTIES] * tightness
        walk[day, 1:PARTIES] ~ ddirch(multinomial[day, 1:PARTIES])
    }

    # - after discontinuity (brackets needed: ":" binds tighter than "+")
    for(day in (discontinuity+1):PERIOD) {
        # Note: use math not a distribution to generate the multinomial ...
        multinomial[day, 1:PARTIES] <- walk[day-1, 1:PARTIES] * tightness
        walk[day, 1:PARTIES] ~ ddirch(multinomial[day, 1:PARTIES])
    }

    ## -- weakly informative priors for first and discontinuity days
    for (party in 1:2) { # for each major party
        alpha[party] ~ dunif(250, 600) # majors between 25% and 60%
        beta[party] ~ dunif(250, 600) # majors between 25% and 60%
    }
    for (party in 3:PARTIES) { # for each minor party
        alpha[party] ~ dunif(10, 250) # minors between 1% and 25%
        beta[party] ~ dunif(10, 250) # minors between 1% and 25%
    }
    walk[1, 1:PARTIES] ~ ddirch(alpha[])
    walk[discontinuity, 1:PARTIES] ~ ddirch(beta[])

    ## -- estimate a Coalition TPP from the primary votes
    for(day in 1:PERIOD) {
        CoalitionTPP[day] <- sum(walk[day, 1:PARTIES] *
            preference_flows[1:PARTIES])
        CoalitionTPP2010[day] <- sum(walk[day, 1:PARTIES] *
            preference_flows_2010[1:PARTIES])
    }

    #### -- house effects model with two-way, sum-to-zero constraints
    ## -- vague priors ...
    for (h in 2:HOUSECOUNT) {
        for (p in 2:PARTIES) {
            houseEffect[h, p] ~ dunif(-0.1, 0.1)
        }
    }

    ## -- sum to zero - but only in one direction for houseEffect[1, 1]
    for (p in 2:PARTIES) {
        houseEffect[1, p] <- 0 - sum( houseEffect[2:HOUSECOUNT, p] )
    }
    for(h in 1:HOUSECOUNT) {
        # includes constraint for houseEffect[1, 1], but only in one direction
        houseEffect[h, 1] <- 0 - sum( houseEffect[h, 2:PARTIES] )
    }

    ## -- the other direction constraint on houseEffect[1, 1]
    zero ~ dsum( houseEffect[1, 1], sum( houseEffect[2:HOUSECOUNT, 1] ) )
}
```

The anchored Dirichlet primary vote model

The anchored Dirichlet model follows. It draws on elements from the anchored TPP model and Dirichlet model above. It is the most complex of these models. It also takes the longest time to produce reliable results. For this model, I run 460,000 samples, taking every 23rd sample for analysis. On my aging Apple iMac and JAGS 4.0.1 it takes around 100 minutes to run.

```
model {
    #### -- observational model
    for(poll in 1:NUMPOLLS) { # for each poll result - rows
        adjusted_poll[poll, 1:PARTIES] <- walk[pollDay[poll], 1:PARTIES] +
            houseEffect[house[poll], 1:PARTIES]
        primaryVotes[poll, 1:PARTIES] ~ dmulti(adjusted_poll[poll, 1:PARTIES], n[poll])
    }

    #### -- temporal model with multiple discontinuities
    # - tightness of fit parameters
    tightness <- 50000 # kludge - today very much like yesterday

    # Up until the first discontinuity ...
    for(day in 2:(discontinuities[1]-1)) {
        multinomial[day, 1:PARTIES] <- walk[day-1, 1:PARTIES] * tightness
        walk[day, 1:PARTIES] ~ ddirch(multinomial[day, 1:PARTIES])
    }

    # Between the discontinuities ... assumes 2 or more discontinuities ...
    for( disc in 1:(n_discontinuities-1) ) {
        for(day in (discontinuities[disc]+1):(discontinuities[(disc+1)]-1)) {
            multinomial[day, 1:PARTIES] <- walk[day-1, 1:PARTIES] * tightness
            walk[day, 1:PARTIES] ~ ddirch(multinomial[day, 1:PARTIES])
        }
    }

    # After the last discontinuity
    for(day in (discontinuities[n_discontinuities]+1):PERIOD) {
        multinomial[day, 1:PARTIES] <- walk[day-1, 1:PARTIES] * tightness
        walk[day, 1:PARTIES] ~ ddirch(multinomial[day, 1:PARTIES])
    }

    # weakly informative priors for first day and discontinuity days ...
    for (party in 1:2) { # for each major party
        alpha[party] ~ dunif(250, 600) # majors between 25% and 60%
    }
    for (party in 3:PARTIES) { # for each minor party
        alpha[party] ~ dunif(10, 200) # minors between 1% and 20%
    }
    walk[1, 1:PARTIES] ~ ddirch(alpha[])

    for(j in 1:n_discontinuities) {
        for (party in 1:2) { # for each major party
            beta[j, party] ~ dunif(250, 600) # majors between 25% and 60%
        }
        for (party in 3:PARTIES) { # for each minor party
            beta[j, party] ~ dunif(10, 200) # minors between 1% and 20%
        }
        walk[discontinuities[j], 1:PARTIES] ~ ddirch(beta[j, 1:PARTIES])
    }

    ## -- estimate a Coalition TPP from the primary votes
    for(day in 1:PERIOD) {
        CoalitionTPP[day] <- sum(walk[day, 1:PARTIES] *
            preference_flows[1:PARTIES])
    }

    #### -- sum-to-zero constraints on house effects
    for(h in 1:HOUSECOUNT) {
        for (p in 2:PARTIES) {
            houseEffect[h, p] ~ dnorm(0, pow(0.05, -2))
        }
    }
    # need to lock in ... but only in one dimension
    for(h in 1:HOUSECOUNT) { # for each house ...
        houseEffect[h, 1] <- -sum( houseEffect[h, 2:PARTIES] )
    }
}
```

Beta model of primary vote share for Palmer United

The Beta distribution is the two-category special case of the Dirichlet distribution. I use the Beta distribution (in a similar model to the Dirichlet model above) to track the primary vote share of the Palmer United Party. This model does not provide for a discontinuity in voting associated with the change of Prime Minister.

```
model {
    #### -- observational model
    for(poll in 1:NUMPOLLS) { # for each poll result - rows
        adjusted_poll[poll] <- walk[pollDay[poll]] + houseEffect[house[poll]]
        palmerVotes[poll] ~ dbin(adjusted_poll[poll], n[poll])
    }

    #### -- temporal model (a daily walk where today is much like yesterday)
    tightness <- 50000 # KLUDGE - tightness of fit parameter selected by hand
    for(day in 2:PERIOD) { # rows
        binomial[day] <- walk[day-1] * tightness
        walk[day] ~ dbeta(binomial[day], tightness - binomial[day])
    }

    ## -- weakly informative priors for first day in the temporal model
    alpha ~ dunif(1, 1500)
    walk[1] ~ dbeta(alpha, 10000-alpha)

    #### -- sum-to-zero constraints on house effects
    for(h in 2:HOUSECOUNT) { # for each house ...
        houseEffect[h] ~ dnorm(0, pow(0.1, -2))
    }
    houseEffect[1] <- -sum(houseEffect[2:HOUSECOUNT])
}
```

Code and data

I have made most of my data and code base available on Google Drive. Please note, this is my live code base, which I play with quite a bit. So, there will be times when it is broken or in some stage of being edited.

What I have not made available are the Excel spreadsheets into which I initially place my data. These live in the (hidden) raw-data directory. However, the collated data for the Bayesian model lives in the intermediate directory, visible from the above link.