Updated on 25 October and 2 November 2023:

Going into the Voice Referendum, the polls collectively suggested that the referendum would be lost. Not one poll in the last couple of months before the referendum predicted a win. This was a win for polling.

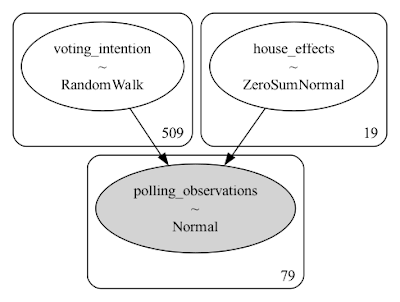

Using a Bayesian technique, we can pool the polls. The technique assumes that the voting intention on one day is much like the day before. We can only know the actual voting intention on referendum day. Prior to the referendum, the model assumes the voting intention broadly tracks the opinion polling. The model also assumes that each pollster has an inherent bias. This bias is referred to as a house effect. This is not to suggest that any pollster is deliberately biased. Rather, the bias comes about from systemic factors such as how individual pollsters select and interview their sample, how results are weighted, and so on. However, the model assumes that, collectively, these biases cancel out (they sum to zero).
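
One common way to write down a model of this kind is as a local-level (random walk) state-space model. The notation below is an assumption for illustration and is not taken from the notebook: $x_t$ is the latent Yes share on day $t$, $y_i$ is the published result of poll $i$ (taken on day $t_i$ by pollster $p_i$), $h_p$ is the house effect of pollster $p$, and $\sigma$ and $\sigma_i$ are the day-to-day and sampling standard deviations.

$$
\begin{aligned}
x_t &\sim \mathcal{N}(x_{t-1},\, \sigma^2) \\
y_i &\sim \mathcal{N}(x_{t_i} + h_{p_i},\, \sigma_i^2) \\
\sum_{p} h_p &= 0
\end{aligned}
$$

The last line is the sum-to-zero constraint. Without it (or some other anchor), adding a constant to every $h_p$ and subtracting it from every $x_t$ would fit the polls equally well, so the overall level could not be pinned down.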

The pooled polls (assuming that individual pollster bias cancelled out) predicted the yes vote would be around 43.4 per cent immediately before the referendum.

As it turned out, this was optimistic. It is now a few weeks since the Voice Referendum was lost. While the count is still progressing, this afternoon (2 Nov) it stood at 39.94% for Yes and 60.06% for No. It is likely that the final count will not differ substantially from this result.
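
The size of the miss is simple arithmetic on the two figures quoted above. A minimal sketch follows; the variable names are illustrative only, and the count is the provisional 2 November figure rather than a final result.

```python
# Figures quoted in the post (per cent).
pooled_yes = 43.4    # pooled-poll estimate immediately before the referendum
counted_yes = 39.94  # Yes share in the count as at 2 November 2023 (provisional)

# A positive value means the pooled polls over-estimated the Yes vote.
pooled_error = round(pooled_yes - counted_yes, 2)

print(f"Pooled polls over-estimated Yes by about {pooled_error} percentage points")
```

Read against the sum-to-zero assumption, a gap of this size suggests the pollsters' biases may not have cancelled out, but leaned towards Yes on average.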

From the house effects chart immediately above, we can see that, over the entire period under analysis, many pollsters had an average house effect whose 95% highest density interval (HDI) included zero. Nonetheless, some pollsters appear to have over-estimated the yes vote by up to 15 percentage points on average, or under-estimated it by around 7 percentage points.

The notebook for this analysis can be found here. The data for the analysis came from Wikipedia.

For another perspective, see Kevin Bonham.
